Tag: data visualisation

-

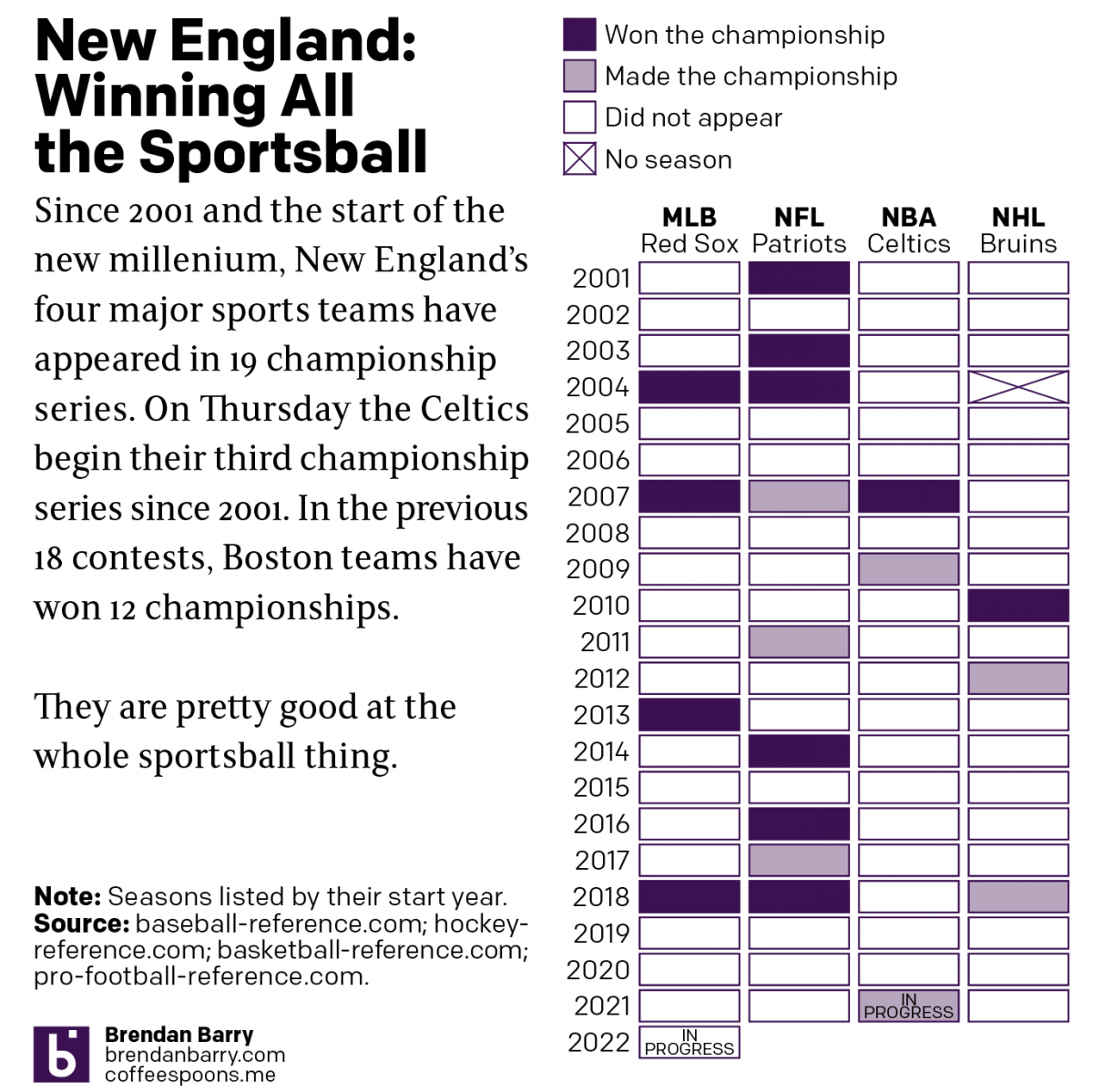

Boston: Sportstown of the 21st Century

Tonight the Boston Celtics play in Game 1 of the NBA Finals against the Golden State Warriors, one of the most dominant NBA teams over the last several years. But since the start of the new century, and the new millennium more broadly, Boston’s four major sports teams have dominated the championship series of those…

-

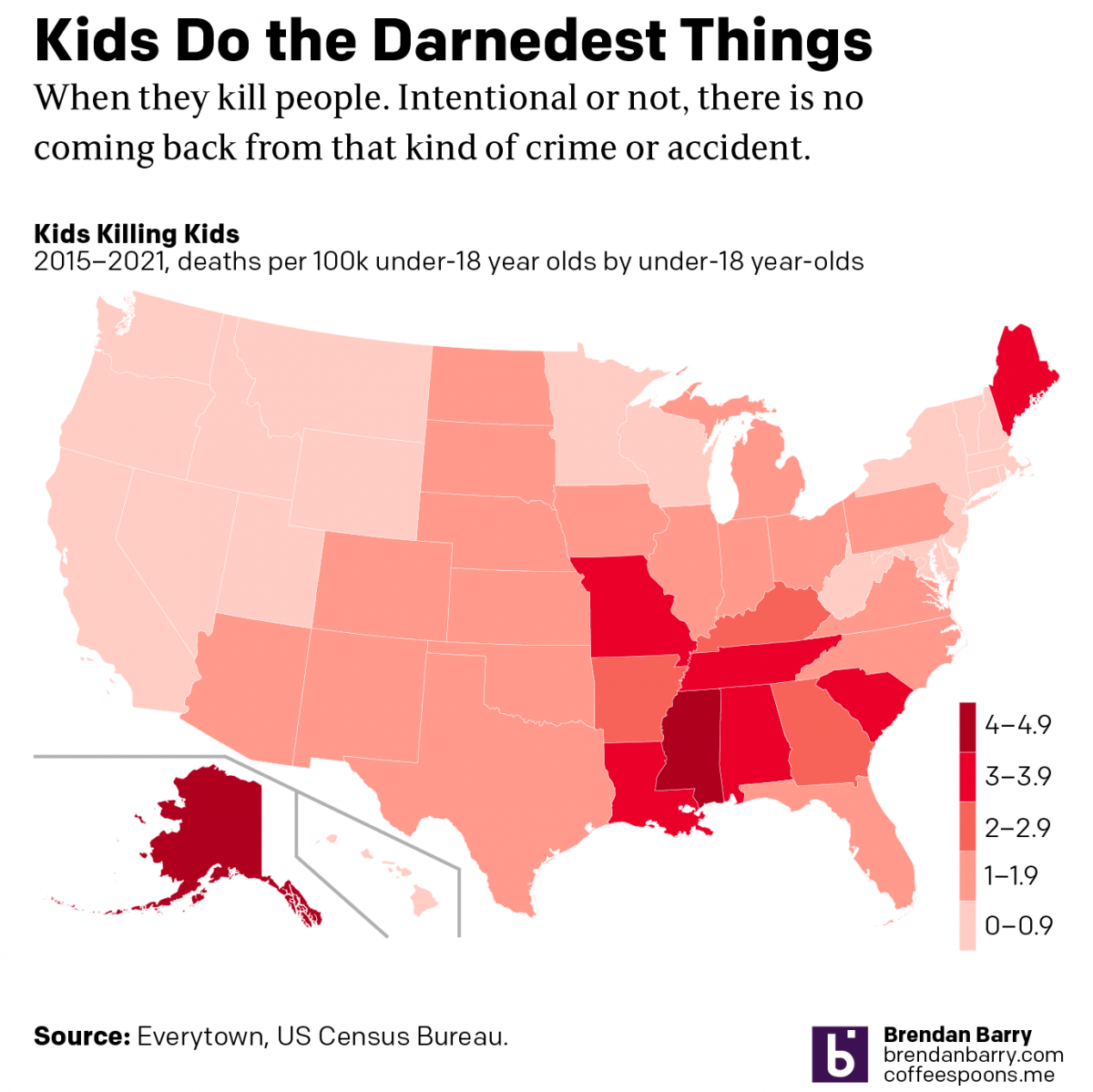

Kids Do the Darnedest Things: But Really They Do

Remember how just last week I posted a graphic about the number of under-18-year-olds killed by under-18-year-olds? Well, now we have an 18-year-old shooting up an elementary school, killing 19 students and two teachers. Legally the alleged shooter, Salvador Ramos, is an adult given his age. But he was also a…

-

The Shrinking Colorado River

Last week the Washington Post published a nice long-form article about the troubles facing the Colorado River in the American and Mexican west. The Colorado is the river dammed by the Hoover and Glen Canyon Dams. It’s what flows through the Grand Canyon and provides water to the thirsty residents of the desert southwest. But…

-

Whilst We Wait for Roe…

to be overturned by the Supreme Court, as seems likely, states have been busy passing laws to both restrict and expand abortion access. This article from FiveThirtyEight describes the statutory activity with the use of a small multiple graphic I’ve screenshotted below. Each little map represents an action that states could have taken recently, for…

-

Kids Do the Darnedest Things: Shoot Other Kids

Last month, a 2-year-old shot and killed his 4-year-old sister whilst they sat in a car at a petrol station in Chester, Pennsylvania, a city just south of Philadelphia. Not surprisingly, some people began to look at the data around kid-involved shootings. One such person was Christopher Ingraham, who explored the data and…

-

One Million Covid-19 Deaths

This past weekend the United States surpassed one million deaths due to Covid-19. To put that in other terms, imagine the entire city of San Jose, California, simply dead. Or just a little bit more than the entire city of Austin, Texas. Estimates place the number of those infected at about 80 million. Back of…